In a market saturated with digital products, the difference between success and obscurity often comes down to one thing: user experience. But how do you create an experience so seamless and intuitive that users don't just use your product but champion it? The answer lies in mastering a set of core usability testing best practices. This isn't just about finding bugs; it's about understanding human behavior, uncovering hidden frustrations, and iteratively sculpting a product that feels like an extension of the user's own mind.

Moving beyond generic advice, this guide dives deep into eight proven, actionable strategies that will transform your testing from a simple checklist item into a powerful engine for growth. You will learn how to define sharp objectives, recruit the right participants, and design realistic tasks that reveal authentic user behavior. We will explore the nuances of observing without leading and analyzing data systematically to drive meaningful product improvements. Ultimately, the insights from rigorous usability testing are the evidence that genuine customer experience optimization depends on.

This listicle provides a comprehensive framework for any team, from solo creators to large enterprises, aiming to build products that people love. Throughout this guide, we'll also show you how tools like BugSmash can streamline feedback collection, turning these best practices into an efficient, collaborative reality for your team. Prepare to move your product from 'good enough' to 'can't-live-without' by implementing these essential techniques.

1. Define Clear Research Questions and Objectives

Jumping into usability testing without a clear plan is like setting sail without a map or a destination. You might discover something interesting, but you're unlikely to reach a valuable, predetermined goal. This is why defining specific, measurable research questions and objectives is the cornerstone of all effective usability testing best practices. It transforms your testing from a vague exploration into a strategic, results-driven investigation.

This foundational step, championed by experts like Jakob Nielsen and Steve Krug, involves identifying precisely what you need to learn. These objectives guide every subsequent decision, from recruiting the right participants to designing relevant tasks and analyzing the findings for actionable insights.

Why It's a Top Practice

Without clear goals, you risk collecting a mountain of data that's interesting but ultimately unusable. You might observe users struggling but lack the context to understand why it matters to your business goals. Clear objectives ensure that your findings directly inform critical product decisions, justify design changes with concrete evidence, and align your entire team on what success looks like.

For instance, a team like Airbnb might set an objective to "identify the top three friction points in the booking process that lead to cart abandonment." This specific goal immediately clarifies what to test, what to measure (abandonment rate, time on task), and how to evaluate the results.

How to Implement This Practice

Turn ambiguity into clarity by framing your goals as answerable questions. A vague goal like "test the new checkout" becomes a powerful question: "Can users successfully complete a purchase using a gift card in under 90 seconds?"

Follow these actionable steps to set your study up for success:

- Limit Your Focus: Concentrate on 3-5 core research questions per study. Trying to answer everything at once will dilute your focus and complicate your analysis.

- Create a Research Brief: Document your objectives, target user profiles, key tasks, and success metrics in a one-page brief. This document becomes your team's single source of truth.

- Share and Align: Circulate the research brief with all stakeholders, including designers, developers, and product managers, before testing begins. This ensures everyone is aligned and provides an opportunity for valuable feedback.
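A research brief can also be kept as structured data next to your test plan, so its limits are checked automatically. The minimal Python sketch below assumes a hypothetical schema: the `ResearchQuestion` and `ResearchBrief` classes, the 3-5 question check, and `share_meeting_goal` are illustrative names, and the 90-second threshold is borrowed from the gift-card example above.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ResearchQuestion:
    """One answerable research question with a measurable success criterion."""
    text: str
    metric: str                 # what will be measured, e.g. "completion time (s)"
    threshold: Optional[float]  # success threshold; None means not yet defined


@dataclass
class ResearchBrief:
    """A one-page brief: objective, target users, and 3-5 research questions."""
    objective: str
    target_users: str
    questions: list = field(default_factory=list)

    def problems(self) -> list:
        """Return issues with the brief; an empty list means it passes the checks."""
        issues = []
        if not 3 <= len(self.questions) <= 5:
            issues.append(f"expected 3-5 research questions, found {len(self.questions)}")
        for q in self.questions:
            if q.threshold is None:
                issues.append(f"no measurable threshold: {q.text!r}")
        return issues


def share_meeting_goal(sessions, limit_s: float = 90.0) -> float:
    """Fraction of sessions completed in under limit_s seconds.

    sessions: iterable of (completed, seconds) tuples, one per participant.
    """
    sessions = list(sessions)
    if not sessions:
        return 0.0
    hits = sum(1 for completed, seconds in sessions if completed and seconds < limit_s)
    return hits / len(sessions)


brief = ResearchBrief(
    objective="Validate the gift-card checkout flow",
    target_users="Returning shoppers who hold a gift card",
    questions=[ResearchQuestion(
        "Can users complete a purchase with a gift card in under 90 seconds?",
        "completion time (s)", 90.0)],
)
print(brief.problems())  # ['expected 3-5 research questions, found 1']
print(share_meeting_goal([(True, 75), (True, 120), (False, 60), (True, 89)]))  # 0.5
```

The brief flags itself because it has only one question, which is a cue to add two to four more before testing begins.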

Key Insight: Well-defined objectives act as a filter, helping you separate signal from noise during analysis. You'll know exactly which user behaviors and quotes are relevant to your goals, making your final report more focused and impactful.

2. Recruit Representative Users

Testing a language-learning app with people who aren't interested in learning a language will yield useless feedback. Similarly, testing complex financial software with tech novices will only tell you it's complex, not how to improve it for its intended expert audience. Recruiting participants who accurately represent your target users is one of the most critical usability testing best practices for generating valid and actionable insights.

This principle, championed by human-centered design leaders like Dana Chisnell and platforms like UserTesting.com, emphasizes that the quality of your participants directly determines the quality of your results. You must identify the key demographic, behavioral, and psychographic traits of your ideal customers and find people who match that profile.

Why It's a Top Practice

Using the wrong participants is a surefire way to invalidate your entire study. Their feedback, struggles, and successes won't reflect how your actual customers will experience the product. This leads to misguided design decisions, wasted development cycles, and a product that misses the mark. Representative users provide relevant context, ensuring the problems you identify are the ones that truly matter to your business.

For example, Duolingo would benefit from recruiting a mix of users: absolute beginners, intermediate learners trying to maintain a streak, and polyglots. Each group has unique motivations and pain points, and testing with all of them provides a holistic view of the user experience.

How to Implement This Practice

Move beyond simple demographics and focus on behaviors and mindsets. Instead of just recruiting "males aged 25-40," target "frequent online shoppers who have abandoned a cart in the last month." This behavioral focus ensures participants can relate to the tasks you present.
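Behavioral criteria like these translate directly into screener logic. The sketch below is hypothetical: the `ScreenerResponse` fields are invented for illustration, and "frequent online shopper" is assumed to mean at least four online orders in the last month.

```python
from dataclasses import dataclass


@dataclass
class ScreenerResponse:
    """Answers from one respondent to a short screener survey (hypothetical fields)."""
    name: str
    online_orders_last_month: int
    abandoned_cart_last_month: bool
    works_for_competitor: bool


def qualifies(r: ScreenerResponse, min_orders: int = 4) -> bool:
    """Apply the disqualifying question first, then the behavioral criteria."""
    if r.works_for_competitor:
        return False
    is_frequent_shopper = r.online_orders_last_month >= min_orders
    return is_frequent_shopper and r.abandoned_cart_last_month


respondents = [
    ScreenerResponse("Ana", 6, True, False),
    ScreenerResponse("Ben", 1, True, False),    # not a frequent shopper
    ScreenerResponse("Chen", 8, True, True),    # works for a competitor
    ScreenerResponse("Dara", 5, False, False),  # no recent abandoned cart
]
print([r.name for r in respondents if qualifies(r)])  # ['Ana']
```

Checking the disqualifier first keeps the rule easy to audit: no behavioral answer can override it.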

Follow these actionable steps for quality recruitment:

- Create Detailed Screener Surveys: Design a short survey with qualifying questions (e.g., "How often do you use creative software?") and disqualifying ones (e.g., "Do you work for a competing software company?") to filter for the right people.

- Use Multiple Recruitment Sources: Don't rely on a single channel. Combine sources like professional recruiting panels, social media groups, and your own customer email lists to find the best fit. The methods for collecting customer feedback described on bugsmash.io can also point to new recruitment avenues.

- Plan for No-Shows: Life happens. A 20-30% no-show rate is common in user research, so always over-recruit to ensure you hit your target number of sessions.
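The over-recruiting arithmetic is simple: divide the sessions you need by the share of people expected to show up, then round up. This Python sketch takes the no-show rate as a whole-number percentage (an assumption made so the ceiling division stays exact), and the function name is hypothetical. Because no-show rates are averages, the result covers your target on average rather than guaranteeing it.

```python
def participants_to_recruit(target_sessions: int, no_show_pct: int) -> int:
    """Smallest recruit count whose expected attendance covers target_sessions.

    Computes ceil(target / (1 - rate)) with integer arithmetic to avoid rounding errors.
    """
    if not 0 <= no_show_pct < 100:
        raise ValueError("no_show_pct must be in [0, 100)")
    return -(-target_sessions * 100 // (100 - no_show_pct))


for pct in (20, 30):
    print(pct, participants_to_recruit(6, pct))
# 20 8
# 30 9
```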

Key Insight: The goal isn't to find "perfect" users, but representative ones. A small group of 5-7 well-recruited participants will reveal more valuable insights about your product's core usability than 50 randomly selected people.
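One widely cited model behind small-sample advice is Nielsen and Landauer's problem-discovery curve: the expected share of problems found by n participants is 1 - (1 - L)^n, where L is the probability that one participant exposes a given problem. Nielsen reported an average L of about 0.31 across the projects studied; your product's value may differ. The model also assumes participants are similar and representative, which is exactly why recruitment quality matters. The function name below is illustrative.

```python
def share_of_problems_found(n: int, detect_prob: float = 0.31) -> float:
    """Expected fraction of usability problems found by n participants: 1 - (1 - L)^n."""
    return 1 - (1 - detect_prob) ** n


for n in (1, 3, 5, 7):
    print(n, round(share_of_problems_found(n), 2))
```

Under these assumptions, five participants surface roughly 84% of problems, and each additional participant adds less than the one before.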

3. Use Realistic Tasks and Scenarios

The most insightful usability tests are those that reflect reality. Asking a participant to "click the blue button" tells you nothing about their natural behavior. Creating tasks that mirror real-world user motivations and contexts is how you uncover authentic usability issues. This is why using realistic tasks and scenarios is one of the most crucial usability testing best practices. It’s the difference between testing in a sterile lab and observing behavior in the wild.

This approach, advocated by usability pioneers like Caroline Jarrett and Ginny Redish, involves giving participants a goal and a story, not a set of instructions. By providing context, you allow users to tap into their own problem-solving skills, revealing the true intuitiveness (or confusion) of your design.

Why It's a Top Practice

Artificial tasks yield artificial results. If you lead participants with overly specific instructions, you are essentially guiding them through a flow, not testing if they can navigate it on their own. Realistic scenarios, on the other hand, reveal genuine friction points, workarounds, and moments of delight because they simulate the user’s actual decision-making process.

For example, a scenario for Uber like, "You're running late for an important meeting downtown and need to get there as quickly as possible," tests more than just button clicks. It tests the user’s ability to handle stress, evaluate options like "Priority Pickup," and quickly input a destination under pressure, providing far richer data.

How to Implement This Practice

Transform your task list into a compelling narrative that gives users a reason to act. Instead of "Find a product," create a scenario: "Find and purchase a birthday gift for your 8-year-old nephew who loves dinosaurs, with a budget of $25."

Follow these actionable steps to design effective scenarios:

- Ground in Reality: Base your scenarios on actual user research, customer support tickets, and analytics data. What are the most common goals your users are trying to achieve?

- Add Context, Not Cues: Provide enough detail to make the scenario relatable but avoid giving away the solution. Include emotional context or time pressure if it’s relevant to the real-world use case.

- Pilot Test Your Tasks: Before running the study with actual participants, test your scenarios with colleagues. This helps you refine the wording and ensure the tasks are clear, unambiguous, and don't accidentally lead the user.
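Part of a pilot review can be automated: a scenario that repeats on-screen labels verbatim is probably giving away the path. This minimal Python sketch flags such cues. The label list is hypothetical, and a clean result does not replace reading the scenario with colleagues.

```python
import re


def find_cues(scenario: str, ui_labels: list) -> list:
    """Return the UI labels that appear in the scenario text (case-insensitive, whole words)."""
    found = []
    for label in ui_labels:
        pattern = r"\b" + re.escape(label) + r"\b"
        if re.search(pattern, scenario, flags=re.IGNORECASE):
            found.append(label)
    return found


labels = ["Add to Cart", "Gift Finder", "Checkout"]  # hypothetical on-screen labels
good = ("Find and purchase a birthday gift for your 8-year-old nephew "
        "who loves dinosaurs, with a budget of $25.")
leading = "Use the Gift Finder to pick a toy, then click Checkout."
print(find_cues(good, labels))     # []
print(find_cues(leading, labels))  # ['Gift Finder', 'Checkout']
```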

Key Insight: Realistic scenarios uncover the "why" behind user actions. When a user succeeds or fails, the attempt is rooted in a genuine goal, which makes their feedback and behavior far more valuable for informing design decisions.

4. Observe and Document Behavior, Not Just Feedback

There is often a significant gap between what users say they will do and what they actually do when faced with a live interface. Relying solely on participant opinions can be misleading, as users may want to be polite or may not accurately recall their own thought processes. This is why one of the most critical usability testing best practices is to prioritize the observation of behavior over verbal feedback. It’s the difference between asking someone if they like a car and watching them struggle to adjust the mirrors.

This principle, championed by UX pioneers like Don Norman and Jakob Nielsen, emphasizes that user actions, hesitations, errors, and unconscious workarounds are the most reliable indicators of usability problems. Observing these behaviors provides unfiltered, objective evidence of where your design is succeeding and where it is failing.

Why It's a Top Practice

Stated preferences are often influenced by social desirability bias, poor memory, or a simple lack of self-awareness. Actions, however, are raw, honest data. When a user says a task was "easy" but you observed them clicking the wrong button three times and sighing in frustration, which data point is more valuable? The behavioral data reveals the real friction points that need fixing.

For example, tech giants invest heavily in behavioral observation. Apple meticulously observes finger placement and gesture patterns during iPhone testing to refine touch interactions, while Microsoft tracks mouse movements and click hesitations to identify confusing elements in its Office suite. These observations lead to data-driven design improvements, not just opinion-based ones.

How to Implement This Practice

Transform your team from survey-takers into keen observers. The goal is to systematically capture what happens during a test, not just what is said after. This requires a structured approach to observation to ensure consistency and reliability.

Follow these actionable steps to focus on behavior:

- Use Observation Templates: Create a standardized sheet or template for note-takers. Include columns for the task, expected action, observed action, errors, user expressions, and direct quotes.

- Record Every Session: Always get consent to record video and screen activity. This allows you to revisit crucial moments, analyze non-verbal cues you might have missed, and create highlight reels for stakeholders.

- Note Timestamps: During observation, instruct note-takers to log timestamps for significant events (e.g., "2:15 – User hesitates for 10 seconds before clicking 'Next'"). This makes finding key moments in recordings incredibly efficient.

- Combine Methods: Use behavioral observation during the task, then follow up with a post-task interview. Ask questions like, "I noticed you paused on this screen for a moment. Can you tell me what you were thinking there?" This links the "what" (behavior) with the "why" (user cognition).
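An observation template with timestamps is easy to keep as structured rows that export to a spreadsheet. The Python sketch below mirrors the template columns listed above. The `Observation` class, the field names, and the m:ss format are assumptions chosen to match the "2:15" example, not a required schema.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields


@dataclass
class Observation:
    """One row of a note-taker's observation sheet."""
    at_seconds: int        # seconds since the session recording started
    task: str
    expected_action: str
    observed_action: str
    error: bool
    expression: str = ""
    quote: str = ""


def format_timestamp(seconds: int) -> str:
    """Render seconds as m:ss, e.g. 135 -> '2:15', to match the recording timeline."""
    minutes, secs = divmod(seconds, 60)
    return f"{minutes}:{secs:02d}"


def to_csv(observations) -> str:
    """Serialize observations to CSV, replacing raw seconds with a readable timestamp."""
    names = ["timestamp"] + [f.name for f in fields(Observation) if f.name != "at_seconds"]
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=names)
    writer.writeheader()
    for obs in observations:
        row = asdict(obs)
        row["timestamp"] = format_timestamp(row.pop("at_seconds"))
        writer.writerow(row)
    return buffer.getvalue()


log = [
    Observation(135, "Checkout", "Click 'Next'", "Hesitates 10 s, then clicks 'Next'",
                error=False, expression="frowns"),
    Observation(190, "Checkout", "Apply gift card", "Opens the FAQ instead",
                error=True, quote="Where do I put the code?"),
]
print(to_csv(log))
```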

Key Insight: User actions are the source of truth in usability testing. While feedback is useful for context, behavioral data provides undeniable evidence of usability issues that must be addressed to improve the user experience.

5. Maintain Neutrality and Avoid Leading Participants

The most valuable usability testing insights come from genuine, uninfluenced user behavior. As a moderator, your primary role is to be a neutral observer, creating a safe space where participants act naturally. Leading a participant, even unintentionally, can contaminate your results, causing users to behave in ways that please you rather than how they would in the real world. Mastering neutrality is one of the most critical usability testing best practices for collecting authentic data.

This principle, heavily emphasized by usability pioneers like Steve Krug and the Nielsen Norman Group, is about preventing your own biases and expectations from influencing the session. Your goal is to understand the user's mental model, not guide them toward a predetermined "correct" path.

Why It's a Top Practice

Leading questions or affirmative reactions can introduce social desirability bias, where participants give answers they believe you want to hear. If you ask, "That new feature is easy to use, right?" most people will agree to avoid seeming incompetent or disagreeable. This creates false positives and can lead your team to ship a flawed design, confident that it works perfectly.

True neutrality uncovers the real user experience. For example, a Slack researcher who avoids saying "Great!" after a user completes a task, and instead asks "Tell me what you were thinking as you did that," gets a much richer, more honest look into the user's thought process and potential hesitations.

How to Implement This Practice

Transform yourself from a guide into a neutral co-explorer. Your language and body language should encourage honesty, not seek confirmation. The difference between asking "Can you find the search button?" and "How would you look for a specific product?" is profound; the first is a test of compliance, while the second reveals natural user behavior.

Follow these actionable steps to ensure your sessions are unbiased:

- Prepare Neutral Phrases: Have a list of go-to, open-ended phrases ready. Instead of "Is that what you expected?", try Shopify’s moderator approach of asking, "What are you thinking now?" or "Talk me through what you see here."

- Practice Active Listening: Focus entirely on what the user is saying and doing. Avoid formulating your next question while they are still talking. Use neutral acknowledgments like "I see" or "Uh-huh" to show you're listening without conveying approval or disapproval.

- Embrace the Silence: When a user gets quiet or struggles, resist the urge to jump in immediately. Productive silence often precedes a key insight as the participant works through their thought process aloud. Let them struggle briefly before offering help.
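Before a session, a rough automated pass over the moderator script can catch the most obvious leading phrasing. The Python sketch below uses hypothetical heuristics (tag questions, evaluative words, expectation checks). It cannot detect every leading question; for example, "Can you find the search button?" still needs a human reviewer to spot that it names the target.

```python
import re

# Hypothetical heuristics for flagging leading wording in a moderator script.
LEADING_PATTERNS = {
    "tag question": r",\s*(right|correct|isn't it|don't you think)\?\s*$",
    "evaluative word": r"\b(easy|simple|intuitive|obvious|great)\b",
    "expectation check": r"\bwhat you expected\b",
}


def flag_leading(question: str) -> list:
    """Return the names of heuristics a draft question trips (empty list = looks neutral)."""
    return [name for name, pattern in LEADING_PATTERNS.items()
            if re.search(pattern, question, flags=re.IGNORECASE)]


script = [
    "That new feature is easy to use, right?",
    "Is that what you expected?",
    "What are you thinking now?",
    "Talk me through what you see here.",
]
for q in script:
    print(f"{q!r}: {flag_leading(q) or 'ok'}")
```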

Key Insight: Your job is not to be the user's friend or a product demonstrator. It's to be a researcher. By maintaining strict neutrality, you ensure the findings you report back to your team are an accurate reflection of the user experience, not a reflection of your own influence.

6. Test Early and Often Throughout Development

Treating usability testing as a one-time, final validation step before launch is a recipe for expensive, last-minute disasters. The most effective product teams embrace a continuous testing mindset, integrating user feedback throughout the entire development lifecycle. This practice of testing early and often is a cornerstone of usability testing best practices, transforming the process from a final exam into an ongoing conversation.

This iterative approach, central to methodologies like Lean UX and Google's Design Sprint process, involves testing everything from low-fidelity concepts to fully functional features. It creates a continuous feedback loop that catches design flaws before they are set in code, saving countless hours of development rework and ensuring the final product truly meets user needs.

Why It's a Top Practice

Waiting until the end to test means that any discovered usability issues are deeply embedded in the product's architecture, making them significantly more difficult and costly to fix. Early testing allows teams to make small, iterative improvements, pivot based on real user behavior, and build confidence in the design direction at every stage.

For example, a team like Spotify can test multiple music discovery concepts using simple paper prototypes before a single line of code is written. This low-cost feedback allows them to quickly discard weak ideas and double down on promising ones, ensuring development resources are invested wisely. Early testing of this kind also complements the quality assurance testing techniques applied later in development.

How to Implement This Practice

Integrate testing directly into your development sprints and project timelines. The goal is to make user feedback a routine part of your workflow, not a special event. Instead of one large study, aim for a series of smaller, faster tests aligned with development milestones.

Follow these actionable steps to build a culture of continuous testing:

- Align Testing with Sprints: Schedule short, recurring usability sessions at key points in your development cycle, such as the end of a sprint, to test what was just built.

- Vary Your Fidelity: Use lo-fi methods like paper prototypes or wireframe tests for early concepts. As the design matures, transition to interactive prototypes and eventually the live product.

- Democratize Insights: Create a streamlined process for sharing key findings quickly. Post video clips and top takeaways in a shared Slack channel or a brief email to ensure developers and designers see the impact of their work immediately.

Key Insight: Continuous testing de-risks product development. Each small test provides a new layer of evidence, ensuring that major design and development investments are based on validated user needs, not just internal assumptions.

7. Analyze Data Systematically and Share Findings Effectively

Raw observations from usability tests are just data points; they only become valuable when they are systematically analyzed and communicated to drive change. Simply collecting feedback is not enough. The process of transforming qualitative and quantitative observations into prioritized, actionable insights is one of the most critical usability testing best practices. Without it, your research efforts will fail to influence product strategy or improve the user experience.

This crucial step, championed by UX research leaders like Jared Spool and Erika Hall, involves organizing your data, identifying cross-participant patterns, and presenting the findings in a way that resonates with stakeholders and compels them to act.

Why It's a Top Practice

Effective analysis prevents "data paralysis," where teams are overwhelmed by observations but unsure what to fix first. A systematic approach ensures that you prioritize issues based on their actual impact on the user and the business, not just on the loudest voice in the room. This moves the conversation from opinion-based debates to evidence-backed decisions.

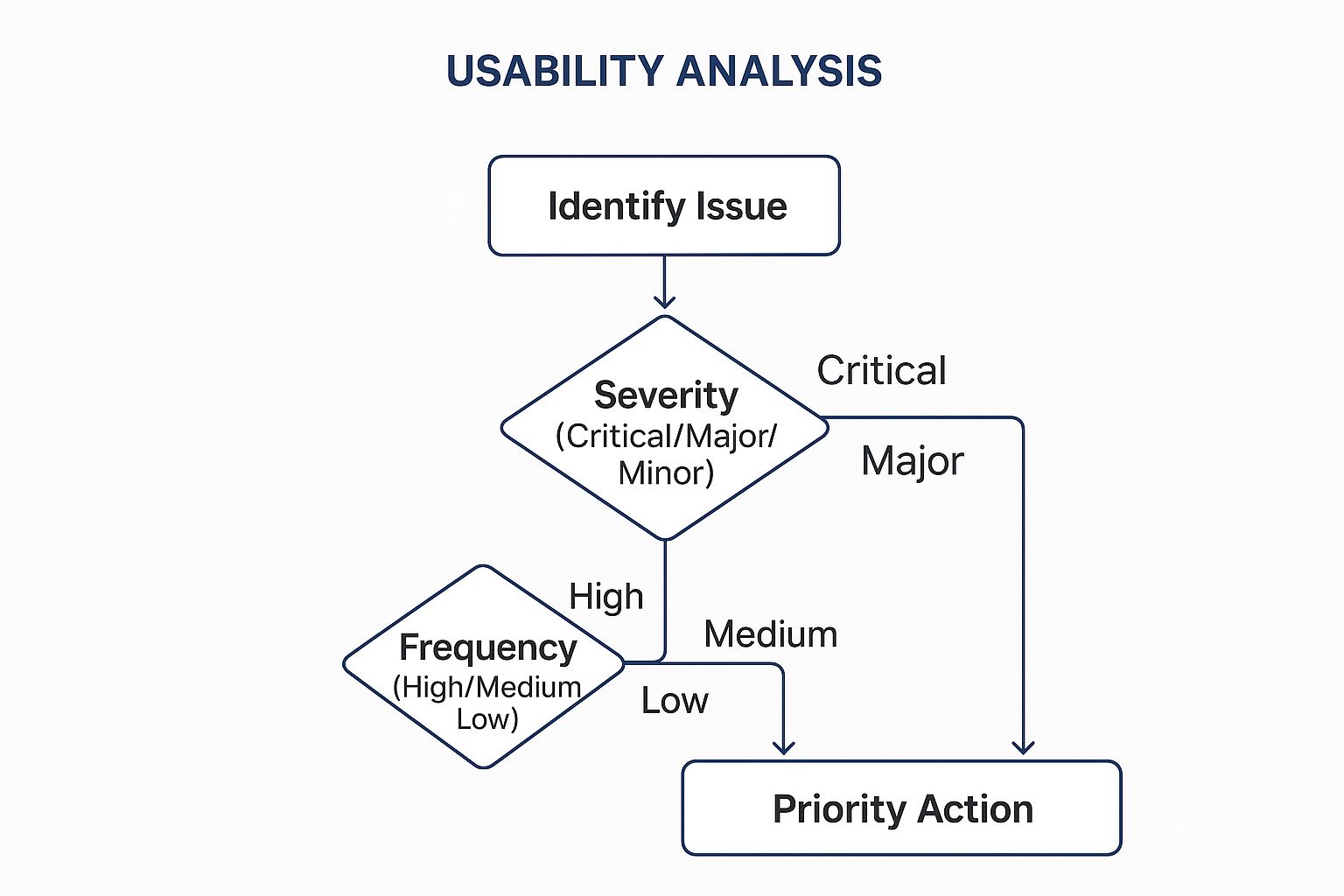

For example, a team at IBM might create a compelling video highlight reel showcasing the top five user struggles, which is far more persuasive than a dry spreadsheet of notes. Similarly, Facebook's method of applying severity ratings (like critical, major, minor) and frequency counts allows them to triage bugs and usability flaws with precision, ensuring engineering resources are spent on what matters most.

This decision tree infographic illustrates how to prioritize issues by evaluating their severity and frequency.

The flowchart shows that an issue's final priority results from combining how severe the problem is with how often it occurs, guiding teams to focus on high-severity, high-frequency problems first.
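To make the severity-by-frequency logic concrete, here is a minimal Python sketch of a prioritization lookup. The severity labels (critical, major, minor) come from the example above. The 50% frequency cutoff, the P1-P4 labels, and the sample issues are hypothetical assumptions, not the exact rules in the infographic.

```python
# Hypothetical matrix: (severity, frequency band) -> priority label.
PRIORITY = {
    ("critical", "high"): "P1", ("critical", "low"): "P2",
    ("major", "high"): "P2",    ("major", "low"): "P3",
    ("minor", "high"): "P3",    ("minor", "low"): "P4",
}


def frequency_band(affected: int, participants: int, cutoff: float = 0.5) -> str:
    """'high' when at least `cutoff` of participants hit the issue, otherwise 'low'."""
    return "high" if affected / participants >= cutoff else "low"


def prioritize(issues, participants: int):
    """Label each (name, severity, affected) issue; sort by priority, then by frequency."""
    ranked = []
    for name, severity, affected in issues:
        label = PRIORITY[(severity, frequency_band(affected, participants))]
        ranked.append((label, -affected, name))
    ranked.sort()
    return [(name, label) for label, _, name in ranked]


issues = [
    ("Gift-card field hidden below the fold", "critical", 4),
    ("Jargon in shipping options", "minor", 1),
    ("Promo banner mistaken for a button", "major", 3),
    ("Payment error message unclear", "critical", 1),
]
for name, label in prioritize(issues, participants=5):
    print(label, name)
```

Note the design choice: a critical issue seen by only one participant still lands at P2 rather than at the bottom, so rare but severe problems are not lost.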

How to Implement This Practice

Turn raw notes into a powerful narrative by establishing your analysis framework before you even start testing. This ensures you are capturing data in a consistent format that simplifies later synthesis.

Follow these steps for impactful analysis and reporting:

- Use an Analysis Template: Create a spreadsheet or a dedicated tool to log observations, participant quotes, task success rates, and severity ratings for each participant. Consistency is key.

- Prioritize with a Matrix: Use a prioritization matrix that plots issue severity against frequency. This provides a clear, data-driven visual for deciding what to tackle in the next design sprint.

- Tell a Story with Your Data: Don't just present problems; present solutions. For each identified issue, include direct user quotes, video clips, and a clear, actionable recommendation. Learn more about how to visualize feedback effectively on bugsmash.io.
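Once observations are logged consistently, per-task metrics fall out of a few lines of code. The sketch below assumes a hypothetical log format of (participant, task, succeeded, seconds) rows and computes each task's success rate and the median time of successful attempts.

```python
from collections import defaultdict
from statistics import median

# Hypothetical analysis-sheet rows: (participant, task, succeeded, seconds).
rows = [
    ("P1", "checkout", True, 80),
    ("P2", "checkout", False, 150),
    ("P3", "checkout", True, 100),
    ("P1", "search", True, 30),
    ("P2", "search", True, 50),
]


def summarize(rows):
    """Per task: success rate across attempts and median time of successful attempts."""
    by_task = defaultdict(list)
    for _participant, task, succeeded, seconds in rows:
        by_task[task].append((succeeded, seconds))
    summary = {}
    for task, attempts in by_task.items():
        success_times = [seconds for ok, seconds in attempts if ok]
        summary[task] = {
            "success_rate": len(success_times) / len(attempts),
            "median_success_time_s": median(success_times) if success_times else None,
        }
    return summary


for task, stats in summarize(rows).items():
    print(task, f"{stats['success_rate']:.0%}", stats["median_success_time_s"])
```

The median is used instead of the mean because a single very slow session can distort an average drawn from only a handful of participants.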

Key Insight: The goal of a usability report is not just to inform but to persuade. Framing your findings as a story with a clear problem, compelling evidence (quotes and clips), and a proposed solution makes your recommendations far more likely to be implemented.

8. Choose Appropriate Testing Methods for Your Context

Selecting a usability testing method isn't a one-size-fits-all decision; it’s a strategic choice that can dramatically influence the quality and relevance of your insights. Just as a carpenter chooses a specific saw for a specific cut, a UX researcher must select the right testing approach based on research goals, timeline, and product maturity. This is why choosing the appropriate method is a fundamental usability testing best practice. It ensures that the feedback you gather is not only accurate but also efficient and impactful for your specific situation.

This practice draws on experts with very different emphases: Steve Krug advocates lightweight, agile testing, while Jeff Rubin is known for formal rigor. It involves understanding the trade-offs between methodologies. From a formal, moderated lab study to quick, informal guerrilla testing, your choice dictates the type of data you'll collect and the resources you'll need.

Why It's a Top Practice

Using the wrong method can be costly and misleading. Running an elaborate, expensive lab study to validate a simple button placement is inefficient. Conversely, relying on quick guerrilla tests for a complex medical device requiring FDA compliance would be dangerously insufficient. Matching the method to your context ensures you invest your resources wisely and generate findings that are both valid and actionable for your specific stage of development.

For example, a company like Zoom might use remote unmoderated testing to gather feedback from thousands of global users on a new feature, while a local coffee shop could use guerrilla testing at their counter to quickly validate their new mobile ordering app. Each method is perfectly suited to the context, scale, and goals of the organization.

How to Implement This Practice

To make the right choice, you must weigh your needs against the strengths of each method. A formal lab study offers control and deep insights but is slow and expensive. Remote unmoderated testing is fast and scalable but lacks the real-time probing of a moderator.

Follow these actionable steps to select the best method for your study:

- Create a Decision Matrix: Chart your options against key factors like research goals (exploratory vs. evaluative), budget, timeline, and the required level of scientific rigor. This visual aid makes the trade-offs explicit and the best fit much easier to spot.

- Consider Hybrid Approaches: Don’t limit yourself to one method. Combine a quantitative unmoderated test, which shows what the problems are, with a qualitative moderated session, which helps explain why they happen.

- Pilot Your Chosen Method: Before launching a large-scale study, run a small pilot test with 1-2 participants. This helps you identify any issues with your tasks, tools, or overall approach, saving you from major problems down the line.
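A decision matrix is often scored as a weighted sum: rate each method against each criterion, weight the criteria by what matters for this study, and compare totals. The Python sketch below is illustrative only. The three criteria, the 1-5 scores, and the weights are hypothetical and should come from your own context.

```python
# Hypothetical 1-5 scores (higher is better) for each method against each criterion.
SCORES = {
    "moderated lab":      {"depth": 5, "speed": 1, "low_cost": 1},
    "remote unmoderated": {"depth": 2, "speed": 5, "low_cost": 4},
    "guerrilla":          {"depth": 1, "speed": 4, "low_cost": 5},
}


def rank_methods(weights: dict, scores: dict = SCORES):
    """Return (method, weighted total) pairs, best first."""
    totals = {
        method: sum(weights[criterion] * score for criterion, score in criterion_scores.items())
        for method, criterion_scores in scores.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# A fast, budget-constrained evaluative study vs. a deep exploratory one.
print(rank_methods({"depth": 4, "speed": 3, "low_cost": 3}))
print(rank_methods({"depth": 8, "speed": 1, "low_cost": 1}))
```

Changing only the weights flips the recommendation from remote unmoderated testing to a moderated lab study, which is the point of making the trade-offs explicit.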

Key Insight: The "best" usability testing method is the one that gets you the most reliable and relevant answers to your specific research questions within your existing constraints. The goal is progress, not methodological perfection.

Usability Testing Best Practices Comparison

| Practice | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Define Clear Research Questions and Objectives | Moderate – requires upfront planning and clear goal setting | Low to moderate – mainly time investment in preparation | Focused, actionable insights aligned with goals | Studies needing targeted insights & stakeholder alignment | Prevents scope creep, maximizes ROI |
| Recruit Representative Users | High – detailed screening and recruitment needed | High – time-consuming and potentially costly | Valid, relevant, unbiased findings | Research requiring authentic user representation | Reduces bias, increases confidence |
| Use Realistic Tasks and Scenarios | Moderate to high – requires deep user understanding | Moderate – effort in task creation and validation | Genuine usability problems & natural behavior insights | Testing realistic usage patterns and motivations | Reveals true issues, improves engagement |
| Observe and Document Behavior, Not Just Feedback | High – skilled observation and multiple data capture methods | High – needs recording tools and trained observers | Objective usability insights beyond self-report | Research emphasizing actual user behavior | Reveals subconscious issues, objective data |
| Maintain Neutrality and Avoid Leading Participants | Moderate – requires skilled moderation and training | Moderate – moderator preparation and practice | Reliable, unbiased user feedback | Moderator-led sessions to avoid bias | Produces accurate, authentic findings |
| Test Early and Often Throughout Development | High – ongoing integration across development stages | High – continuous resource and coordination | Early detection and iterative improvements | Agile or iterative development environments | Reduces cost, fosters user-centered design |
| Analyze Data Systematically and Share Findings Effectively | High – requires structured frameworks and skill | Moderate to high – time and analytical skills needed | Actionable, prioritized insights that drive decisions | Projects needing clear communication of results | Transforms data into recommendations |
| Choose Appropriate Testing Methods for Your Context | High – requires knowledge and decision-making frameworks | Moderate to high – varies with method chosen | Optimized impact and efficient resource use | Matching method to goals, timeline, and budget | Maximizes impact, ensures appropriate rigor |

Putting Theory into Practice: Your Next Steps in Usability Mastery

We've explored the foundational pillars of effective usability testing, from defining crystal-clear objectives to systematically analyzing and sharing your findings. The journey from novice to expert is not about memorizing a checklist; it's about internalizing a user-centric mindset. Mastering these usability testing best practices transforms how you build products, shifting the focus from assumptions to evidence-based decisions. This is the bedrock of creating experiences that don't just function, but truly delight and retain users.

Remember, each practice we discussed is interconnected. Recruiting the right participants is pointless without realistic tasks. Observing behavior is only half the battle if your analysis is flawed. The true power emerges when these elements work in concert, creating a continuous feedback loop that fuels innovation and de-risks your development process.

From Knowledge to Action: Your Implementation Roadmap

Reading about best practices is the first step, but real growth happens when you apply them. Don't feel overwhelmed by the need to implement everything at once. Instead, focus on incremental improvements. Here is a practical roadmap to get you started:

- For Your Very Next Test: Pick just one or two practices to focus on. Perhaps it’s refining your scenarios to be more realistic or practicing active listening to avoid leading participants. Small, focused changes are easier to adopt and build momentum.

- Within the Next Month: Audit your entire process. How are you recruiting users? Is your data analysis truly systematic? Identify the weakest link in your usability testing chain and dedicate resources to strengthening it. This is where you start building a robust, repeatable system.

- Over the Next Quarter: Your goal is to embed these practices into your team's culture. Make "test early, test often" a non-negotiable part of your workflow. Create templates for test plans and reports to standardize your approach and ensure consistency. The aim is to make high-quality usability testing the default, not the exception.

The Lasting Impact of True User Empathy

Ultimately, embracing these usability testing best practices is about more than just fixing UI flaws or reducing bounce rates. It’s about building a profound sense of empathy for the people you serve. When you watch a user struggle with a task you thought was simple, it fundamentally changes your perspective. You stop seeing your product as a collection of features and start seeing it through their eyes, as a tool meant to solve a problem or fulfill a need.

This empathetic approach has a ripple effect across your entire organization. It leads to better-informed product roadmaps, more persuasive marketing copy, and a shared passion for creating genuine value. It is a competitive advantage that cannot be easily replicated. To further your usability mastery, exploring additional tools and resources, such as those offered by tnote, can help broaden your toolkit and perspective.

Adopting a rigorous testing methodology moves your team from a reactive state of fixing bugs to a proactive state of building user loyalty. The insights you gain become the north star guiding your strategy, ensuring that every development cycle brings you closer to a product your audience will champion. This is your path to not just building a successful product, but building a beloved brand.

Ready to streamline your feedback process and supercharge your usability testing? BugSmash centralizes annotations on websites, videos, and images, turning scattered feedback into actionable insights. Stop chasing screenshots and start collaborating effectively. See how a dedicated platform can help you implement these usability testing best practices with ease.