Let's be real—a quality review process is supposed to be the engine that drives product excellence. It’s a structured way to check work—code, designs, you name it—to make sure it hits the mark for accuracy, consistency, and function.

When it works, it’s a beautiful thing. But when it doesn’t? It’s a time-consuming bottleneck that creates friction and slows everything to a crawl.

Why Most Quality Review Processes Falter

For too many teams, the quality review process feels more like a roadblock than a valuable checkpoint. It’s often treated as a necessary evil that grinds delivery to a halt, introduces friction, and leaves everyone feeling frustrated. The problem usually isn’t the people; it’s the clunky, outdated process they're forced to wrestle with.

You’ve probably seen these broken workflows in action. They’re a chaotic mess of spreadsheets for tracking, never-ending email chains for feedback, and a storm of Slack pings for anything "urgent." The result is a system where nothing is centralized and context gets lost in the shuffle.

The True Cost of Inconsistent Feedback

When feedback is all over the place, developers are left trying to read minds. One reviewer might get hung up on minor style choices while another flags major architectural flaws—sometimes on the very same piece of work. Without a single source of truth, developers waste precious time trying to reconcile conflicting advice or, even worse, just start ignoring feedback.

This isn't just an internal headache; it creates real business problems:

- Zombie Bugs: Issues you thought were "fixed" have a nasty habit of coming back to life in later releases. Why? Because the root cause was never properly addressed during a shallow, inconsistent review.

- Wasted Developer Hours: A huge chunk of a developer’s time gets eaten up by rework, deciphering vague feedback, and manually chasing down the status of a review. It's not uncommon for developers to spend up to 40% of their time on work that a better process could have prevented entirely.

- Sinking Team Morale: The constant back-and-forth and subjective criticism can feel personal. It slowly turns what should be a collaborative effort into an adversarial relationship, killing motivation and teamwork.

The biggest flaw in any quality review process is ambiguity. When quality isn't clearly defined and feedback isn't standardized, the whole system defaults to chaos. That chaos costs far more in lost productivity than you'd ever save by cutting corners.

Manual Tracking Is a Recipe for Failure

Let's talk about the other major pain point: manual tracking. Picture a product manager trying to get a status update on a critical feature. Their day becomes a scavenger hunt—digging through a spreadsheet, pinging the assigned reviewer on Slack, then trying to find that one specific email with the latest comments. It’s wildly inefficient and practically designed for errors.

This manual overhead doesn't just slow things down; it creates massive blind spots. Without a central dashboard, it's nearly impossible to spot bottlenecks. Is one team constantly buried in reviews? Is a certain type of task always getting stuck in limbo? Good luck figuring that out without spending hours crunching data no one has time for.

The consequences start to pile up. Missed deadlines become the norm. Product quality drops as bugs slip through the cracks, leading directly to customer complaints and churn. In the end, a broken quality review process isn't just an inconvenience—it’s a direct threat to your customer satisfaction and your bottom line. The process itself becomes the biggest bug of all.

How To Design a Modern Review Framework

Let's be honest, moving from the chaos of inconsistent feedback to the clarity of a well-oiled machine feels like a huge leap. But it all starts with a solid blueprint. Architecting a modern quality review process isn’t about forcing rigid rules on your team. It's about creating a framework that fits their natural rhythm and actually helps them produce their best work. This is the critical shift from a reactive, "bug-hunting" mindset to a proactive culture of quality.

The first step is foundational, yet it's the one most teams skip: defining what "quality" actually means for your product. Seriously. Is it a complete absence of bugs? Pixel-perfect UI alignment across all devices? Strict adherence to the brand's tone of voice? Without a shared definition, everyone is aiming at a different target.

This definition becomes your North Star, guiding every single decision within your review framework. It has to be specific, measurable, and crystal clear to everyone, from the newest developer to senior product leaders.

Establish Crystal-Clear Roles and Responsibilities

Once you know what you're aiming for, you have to define who is responsible for what. One of the biggest sources of friction I've seen in review cycles is simple ambiguity over ownership. When roles get blurry, important tasks get dropped and accountability vanishes.

In a modern setup, every person involved has a distinct purpose. This neatly sidesteps the classic "too many cooks in the kitchen" problem, where feedback becomes contradictory and just plain overwhelming. For instance, a designer's review should zero in on visual consistency and user experience, while an engineer's review validates code integrity and performance.

The end goal is to build a system where team members can genuinely trust each other's expertise. A developer shouldn't have to second-guess design feedback, and a designer should feel confident in the technical implementation. That clarity is the secret sauce for a smooth and efficient workflow.

A well-designed review framework doesn't just catch errors; it builds trust. When everyone knows their role and what's expected of them, the process transforms from a hurdle into a collaborative checkpoint that strengthens the final product.

To bring this to life, you need to define these key roles within your workflow. Here is a simple breakdown of how responsibilities can be structured, especially when using a central hub like BugSmash, to ensure nothing falls through the cracks.

Key Roles in a Modern Quality Review Workflow

A breakdown of responsibilities to ensure clarity and accountability at each stage of the review process.

| Role | Primary Responsibility | Key Tool Usage in BugSmash |
|---|---|---|
| The Creator (e.g., Developer, Designer) | To produce the initial work and ensure it meets the initial project requirements and quality definition. | Uploading assets (websites, videos, images) for review and addressing annotated feedback directly. |
| The Peer Reviewer (e.g., Fellow Engineer) | To check for technical accuracy, adherence to coding standards, and immediate functional issues. | Leaving specific, actionable comments and annotations on the work, tagging other relevant team members. |
| The Subject Matter Expert (SME) | To validate the work against business logic, user needs, or strategic goals. | Using custom checklists in BugSmash to ensure all high-level requirements are met before approval. |
| The Final Approver (e.g., Product Manager) | To give the final sign-off, confirming the work is ready for the next stage or for release. | Changing the review status within BugSmash to "Approved" to trigger the next step in the workflow. |

By clearly assigning these roles, you create a chain of accountability that not only speeds up the process but also improves the quality of the feedback at every turn.

Build Standardized Checklists and Workflows

With roles clearly defined, the next move is to standardize the review itself. This is where you mercilessly eliminate guesswork and subjectivity. Instead of relying on memory, teams should use standardized checklists tailored to each type of review. For developers, a comprehensive code review checklist is a great starting point and provides a solid foundation for consistency.

These checklists, which you can build right into a platform like BugSmash, are your secret weapon for making sure every review is both comprehensive and consistent.

- For a Design Review: Your checklist might include items for brand guideline adherence, accessibility standards (WCAG), and responsive behavior.

- For a Content Review: You could check for tone of voice, grammatical accuracy, and SEO keyword inclusion.

- For a Code Review: The list might cover security vulnerabilities, performance optimization, and documentation standards.
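As an illustration of how a checklist removes guesswork, here is a minimal Python sketch that stores a review checklist as plain data and only allows approval once every item is ticked. The item names come from the design review example above; the `Checklist` class, `new_checklist`, and `can_approve` are hypothetical names for this sketch, not BugSmash's data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Checklist:
    """A named review checklist: each item maps to whether it has been confirmed."""
    name: str
    items: dict[str, bool] = field(default_factory=dict)

    def check(self, item: str) -> None:
        # Only items that are part of the agreed checklist can be ticked.
        if item not in self.items:
            raise KeyError(f"unknown checklist item: {item!r}")
        self.items[item] = True

    def outstanding(self) -> list[str]:
        # Items the reviewer still has to confirm, in their original order.
        return [item for item, done in self.items.items() if not done]


def new_checklist(name: str, items: list[str]) -> Checklist:
    # Every review starts with all items unchecked.
    return Checklist(name, {item: False for item in items})


def can_approve(checklist: Checklist) -> bool:
    # Approval is allowed only when nothing is left outstanding.
    return not checklist.outstanding()


design_review = new_checklist(
    "Design Review",
    ["Brand guideline adherence", "Accessibility (WCAG)", "Responsive behavior"],
)
design_review.check("Brand guideline adherence")
design_review.check("Accessibility (WCAG)")
print(can_approve(design_review))   # False
print(design_review.outstanding())  # ['Responsive behavior']
```

Because the checklist is data rather than a reviewer's memory, every reviewer is held to the same list, and an unfinished item is visible instead of silently skipped.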

These standardized elements are absolutely crucial. They ensure every piece of work gets evaluated against the same high bar, no matter who is doing the review. This consistency is the backbone of any quality process that's built to scale. It’s part of a bigger industry shift, where the quality review process is no longer just about catching errors at the end. Instead, it's becoming a core, integrated part of the entire value chain, ensuring quality is a continuous, built-in habit.

Putting Your New Process Into Action

So you've designed a brilliant framework. That's a huge step. But a plan on paper is only as good as its execution, and this is where many teams get tripped up. Let's walk through how to actually implement your new quality review process using BugSmash, turning that blueprint into a machine that just works.

Moving away from the chaos of scattered spreadsheets and endless email chains can feel like a massive undertaking. I get it. The key isn't to flip a switch overnight and hope for the best. Instead, we'll focus on a phased, deliberate rollout that builds momentum, gets your team on board, and smooths out the rough edges before you go all-in.

Launch a Pilot Project First

Before you overhaul everyone's workflow, start small. Pick a single, non-critical project to act as your pilot. This is your chance to test the new process in a low-risk environment—think of it as a shakedown cruise for your new system.

For instance, you could choose a small internal tool update or a new marketing landing page. Your pilot group—maybe just one developer, a designer, and a product manager—will be the first to use your BugSmash setup. Their direct experience is pure gold; it gives you invaluable, real-world feedback to refine everything before anyone else jumps in.

This approach pays off in a few critical ways:

- Finds Hidden Bottlenecks: You might discover a specific review stage takes way longer than you thought, or that a checklist item is confusing.

- Creates Your First Fans: The pilot team becomes your internal champions. When they have a great experience, their success story makes the wider rollout so much easier.

- Refines Your BugSmash Setup: It's the perfect time to tweak notification settings, custom fields, and workflow triggers based on how people actually use the tool.

A pilot project isn’t a delay—it’s an accelerator. The insights you gain from a small-scale test will save you from making large-scale mistakes, ensuring your full launch is a success from day one.

Once the pilot is a success, you'll have a proven model and a group of enthusiastic evangelists ready to help their peers get on board.

Configure Your Automated Workflows

This is where the magic happens. The real power of your new process comes from automation, where you eliminate the manual, soul-crushing tasks that used to slow everyone down. In BugSmash, you can set up automation rules that handle the admin work, freeing up your team to focus on quality, not just coordination.

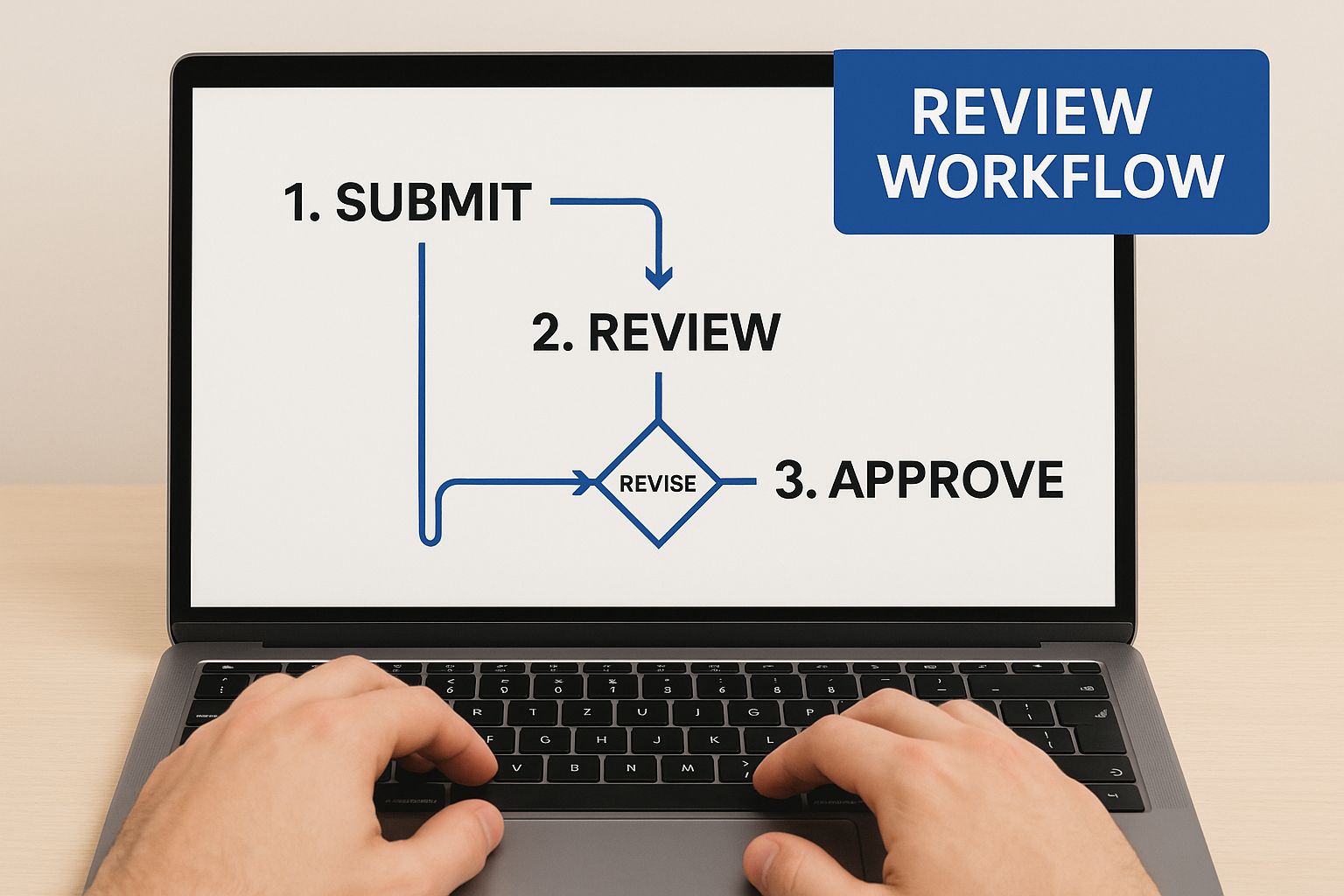

The workflow shown here is fairly standard. What matters is that every handoff is an opportunity for smart automation.

The takeaway here is that you can use automation to keep the momentum going and ensure nothing falls through the cracks. Instead of manually pinging people, set up rules to do it for you. To truly embed this process, leveraging technology is key; this article shares some powerful business process automation examples that can seriously boost efficiency.

Setting Up Different Review Types

Your quality review process can't be one-size-fits-all. A code review has totally different needs than a design critique or user acceptance testing (UAT). BugSmash is built for this, letting you create distinct workflows and checklists for each type of review, all under one roof.

Here’s a practical way to think about structuring them:

1. Code Reviews

Set up a project template just for the engineering team. Your automation rule could be something like: "When a developer moves a ticket to 'Ready for Review,' automatically assign the team's lead engineer and apply the 'Code Review Checklist.'" This guarantees your technical standards are checked, every single time.

2. Design Critiques

With design, the focus shifts to visual feedback and the user experience. A good workflow might be: "When a designer uploads a new mockup, notify the Product Manager and the front-end dev lead." This gets the right eyes on it early in the process. For a deeper dive, our guide on online proofing mastery can help transform your content review process with specific annotation tips.

3. User Acceptance Testing (UAT)

This is the critical final gate. You can create a workflow where deploying a feature to staging automatically triggers a review request for the QA team and key business stakeholders. Once they give their approval, the status can automatically change to "Ready for Production."
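The three rules above share the same "when X happens, do Y" shape. The following Python sketch models that shape with a tiny in-memory rule dispatcher. It assumes hypothetical event names (such as `moved_to_ready_for_review`) and placeholder assignee names; it is not how BugSmash implements automation, only a way to reason about which trigger should fire which action.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Task:
    title: str
    review_type: str                      # hypothetical values: "code", "design", "uat"
    status: str = "In Progress"
    assignees: list[str] = field(default_factory=list)
    checklist: Optional[str] = None
    notifications: list[str] = field(default_factory=list)


@dataclass
class Rule:
    """When `event` happens to a task that `matches`, run `action` on it."""
    event: str
    matches: Callable[[Task], bool]
    action: Callable[[Task], None]


def assign_code_review(task: Task) -> None:
    task.assignees.append("lead-engineer")        # placeholder reviewer name
    task.checklist = "Code Review Checklist"


def notify_design_reviewers(task: Task) -> None:
    task.notifications += ["product-manager", "frontend-lead"]


def request_uat(task: Task) -> None:
    task.status = "Awaiting UAT"
    task.notifications += ["qa-team", "business-stakeholders"]


def promote_after_uat(task: Task) -> None:
    task.status = "Ready for Production"


RULES = [
    Rule("moved_to_ready_for_review", lambda t: t.review_type == "code", assign_code_review),
    Rule("mockup_uploaded", lambda t: t.review_type == "design", notify_design_reviewers),
    Rule("deployed_to_staging", lambda t: t.review_type == "uat", request_uat),
    Rule("uat_approved", lambda t: t.review_type == "uat", promote_after_uat),
]


def dispatch(event: str, task: Task) -> None:
    # Run every rule registered for this event whose condition matches the task.
    for rule in RULES:
        if rule.event == event and rule.matches(task):
            rule.action(task)


pr = Task("Refactor auth module", review_type="code", status="Ready for Review")
dispatch("moved_to_ready_for_review", pr)
print(pr.assignees, pr.checklist)  # ['lead-engineer'] Code Review Checklist

feature = Task("New checkout flow", review_type="uat")
dispatch("deployed_to_staging", feature)
dispatch("uat_approved", feature)
print(feature.status)  # Ready for Production
```

Keeping each rule as a separate condition and action makes the workflow easy to audit: supporting a new review type means adding a rule, not rewriting the dispatcher.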

By tailoring the process to the type of work being done, you make sure reviews are relevant and effective, not just a generic box-ticking exercise. This is how you move from a process on paper to a dynamic, everyday reality that genuinely improves quality at every step.

Using Analytics To Drive Continuous Improvement

A quality process that doesn't evolve is one that's already failing. Real excellence isn't just about catching mistakes; it's about using data to get better every single day. This is how you transform your review cycle from a static gate into a dynamic feedback loop that proactively prevents problems before they start.

Think of a platform like BugSmash as more than just a task manager—it’s a data-generating machine. The analytics features give you a real-time health check on your entire quality review process. You get to see exactly what's working and, more importantly, what isn't. It’s time to move beyond gut feelings and start making decisions backed by cold, hard numbers.

Identify The KPIs That Actually Matter

Before you can improve your process, you have to measure it correctly. Forget vanity metrics. You need to focus on key performance indicators (KPIs) that directly reflect the efficiency and effectiveness of your team's workflow. These numbers tell the real story.

Inside BugSmash, you can spin up custom dashboards to track the metrics that are most relevant to your goals. Here are a few essentials I always recommend starting with:

- Review Cycle Time: How long does it take for a task to go from the review queue to final approval? A consistently high or climbing cycle time is a classic sign of a bottleneck somewhere in your process.

- Bug Escape Rate: This tracks the share of defects that slip past your review and are first discovered in the live product. It’s the ultimate report card for your process's effectiveness.

- Feedback Implementation Rate: What percentage of feedback comments are actually addressed? A low rate could mean the feedback is unclear, irrelevant, or that your developers are simply swamped.

- Rejection Rate: This is the percentage of reviews sent back for major revisions. A high rejection rate often signals a problem much earlier in the cycle, like unclear initial requirements.

These metrics aren't just numbers on a screen; they are powerful diagnostic tools. They help you ask smarter questions to get to the root cause of recurring headaches.
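If your tool lets you export raw review data, these four KPIs take only a few lines to compute. This Python sketch assumes a hypothetical `ReviewRecord` with submission and approval timestamps, comment counts, and a sent-back flag, and it treats bug escape rate as the share of all known defects that were first found in production, which is one common definition. Field names and sample values are made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ReviewRecord:
    submitted: datetime       # task entered the review queue
    approved: datetime        # task received final approval
    comments: int             # feedback comments left by reviewers
    comments_addressed: int   # comments the creator actually resolved
    sent_back: bool           # returned for major revisions at least once


def review_cycle_hours(records: list[ReviewRecord]) -> float:
    # Average time from review queue to final approval, in hours.
    if not records:
        return 0.0
    total_seconds = sum((r.approved - r.submitted).total_seconds() for r in records)
    return total_seconds / len(records) / 3600


def feedback_implementation_rate(records: list[ReviewRecord]) -> float:
    # Share of all feedback comments that were addressed (0.0 if there were none).
    total = sum(r.comments for r in records)
    return sum(r.comments_addressed for r in records) / total if total else 0.0


def rejection_rate(records: list[ReviewRecord]) -> float:
    # Share of reviews sent back for major revisions.
    return sum(r.sent_back for r in records) / len(records) if records else 0.0


def bug_escape_rate(found_in_review: int, found_in_production: int) -> float:
    # Share of all known defects that were first found in production.
    total = found_in_review + found_in_production
    return found_in_production / total if total else 0.0


records = [
    ReviewRecord(datetime(2024, 5, 1, 9), datetime(2024, 5, 2, 9), 10, 8, False),
    ReviewRecord(datetime(2024, 5, 3, 9), datetime(2024, 5, 3, 21), 4, 4, True),
]
print(review_cycle_hours(records))            # 18.0
print(feedback_implementation_rate(records))  # 12 of 14 comments addressed
print(rejection_rate(records))                # 0.5
print(bug_escape_rate(found_in_review=45, found_in_production=5))  # 0.1
```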

Pinpoint Bottlenecks With Custom Dashboards

Once you’re tracking the right KPIs, the real magic happens when you visualize them. A generic, one-size-fits-all report is fine, but a custom dashboard in BugSmash tailored to your team's specific needs is where the insights truly live.

For instance, imagine your dashboard shows that the average Review Cycle Time has crept up by 20% over the last month. That’s a clear problem, but the real value comes from digging deeper. You can create widgets that break down that cycle time by:

- Team or Department: Is the slowdown specific to engineering, or is the design team also feeling the crunch?

- Reviewer: Is one overloaded person holding up multiple projects?

- Task Type: Are code reviews flying through while documentation reviews are left to languish for weeks?

This level of detail transforms a vague complaint ("reviews are too slow") into an actionable insight ("our documentation reviews lack a clear owner, which is causing delays"). From there, you can take targeted action, like assigning a dedicated documentation reviewer or clarifying responsibilities for that task type.
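Under the hood, breaking a metric down by team, reviewer, or task type is a group-and-average operation. The sketch below assumes each review is exported as a dictionary with hypothetical `team`, `reviewer`, `task_type`, and `cycle_hours` keys; the sample data is invented to show how a slow category such as documentation stands out, and `percent_change` shows how a month-over-month rise like the 20% above would be measured.

```python
from collections import defaultdict
from statistics import mean


def average_cycle_time_by(reviews: list[dict], key: str) -> dict:
    """Group reviews by one dimension (team, reviewer, task type) and average cycle time."""
    groups = defaultdict(list)
    for review in reviews:
        groups[review[key]].append(review["cycle_hours"])
    return {group: mean(hours) for group, hours in groups.items()}


def percent_change(previous: float, current: float) -> float:
    # Period-over-period change, e.g. last month's average vs. this month's.
    return (current - previous) * 100 / previous


reviews = [
    {"team": "engineering", "reviewer": "ana", "task_type": "code", "cycle_hours": 6},
    {"team": "engineering", "reviewer": "ana", "task_type": "code", "cycle_hours": 10},
    {"team": "engineering", "reviewer": "raj", "task_type": "docs", "cycle_hours": 120},
    {"team": "design", "reviewer": "lee", "task_type": "design", "cycle_hours": 24},
]

for dimension in ("team", "reviewer", "task_type"):
    print(dimension, average_cycle_time_by(reviews, dimension))

by_type = average_cycle_time_by(reviews, "task_type")
print(max(by_type, key=by_type.get))  # docs
print(percent_change(20.0, 24.0))     # 20.0
```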

The goal of analytics isn't just to report data; it's to provoke action. A good dashboard doesn’t just show you what happened—it shows you where to look next to make things better.

Drive Strategic Decisions With Data

With these clear insights in hand, you can finally make informed, strategic improvements to your quality review process. This is where your process becomes a living system that continuously adapts and improves.

Let's say your data reveals that a high number of visual defects are slipping through on website updates. That insight could lead you to improve how your team collects feedback for websites by creating a mandatory "Visual QA Checklist" right within BugSmash. This is a proactive change, born from data, that directly addresses a weak point in your system.

This reliance on data-driven quality is a growing trend. Market forecasts starting from 2025 project the global quality management software market to grow from $8.68 billion to $20.83 billion. And with 60% of manufacturers confirming that a robust system boosts compliance, it's clear that investing in this kind of technology is becoming a competitive necessity.

When you regularly review your analytics and make these kinds of data-backed tweaks, you create a powerful cycle of continuous improvement. Your quality review process stops being a rigid, static procedure and becomes one of your organization's greatest strategic assets.

Advanced Strategies For a World-Class Quality Culture

So, you've got a solid, data-driven framework humming along. Your quality review process is catching bugs and keeping things running smoothly. That's great. But if you want to move from a good engineering organization to a truly great one, it's time to think bigger.

The real magic happens when you build a genuine culture of quality—an environment where excellence isn't just a box to check, but a shared value that’s woven into every conversation, decision, and team ritual.

Make Reviews a Mentoring Opportunity

One of the most powerful, and often overlooked, aspects of any review is its potential as a mentoring tool. It’s easy to just point out what’s wrong. It’s far more impactful to use that moment to explain why a different approach is better. This simple shift turns a potentially tense interaction into a huge learning opportunity.

Imagine a senior dev reviewing a junior dev's code in BugSmash. Instead of just flagging an inefficient query, they could add a comment explaining the performance hit and linking to an internal wiki on database optimization. It’s a small act that, over time, levels up the entire team.

This approach pays off in a big way:

- Faster Skill Development: Junior developers level up much faster when they get constructive, contextual feedback on their actual work.

- Stronger Team Bonds: It fosters a collaborative, supportive vibe where people feel safe asking for help.

- Better Code Consistency: Mentoring is one of the best ways to standardize best practices across the whole engineering org.

Implement Blameless Post-Mortems

Sooner or later, a critical bug will slip through and affect users. It’s inevitable. How your team responds in that moment defines your culture. The best organizations don’t point fingers; they practice blameless post-mortems. The goal isn’t to find someone to blame, but to dig into the systemic weaknesses that let the issue happen in the first place.

The focus is always on the "what" and "how," never the "who."

The only question that matters is, "What can we improve in our process to prevent this entire class of problem from happening again?" This mindset moves the conversation from individual fault to collective strength.

For example, maybe a deployment fails because of a manual step in the release process. The outcome isn’t to discipline the person who made a mistake. Instead, the team might create an automation rule in BugSmash to handle that step from now on, making the system more robust for everyone.

Connect Quality Metrics to Team Goals

For quality to become a shared responsibility, it needs to be a visible and celebrated part of your team’s goals. Numbers on a forgotten dashboard are easy to ignore. But when those numbers are tied directly to team objectives—and maybe even performance reviews—they suddenly carry a lot more weight.

This isn’t about penalizing teams for every bug. It’s about rewarding improvements in key quality indicators. You could set a quarterly goal to cut the bug escape rate by 15% or slash the review cycle time for critical features. When you celebrate these wins, you're reinforcing the idea that quality is a direct contributor to the company’s success. For more on this, check out this fantastic piece on building a culture of excellence.

Weave User Feedback Into Every Review

At the end of the day, the ultimate measure of quality is whether your customers are happy. A world-class process finds ways to pipe the voice of the user directly into daily work. With a tool like BugSmash, it's easy to pull customer-reported issues from support tickets or feedback forums and turn them into reviewable items.

There’s nothing quite like seeing a bug ticket that includes a direct quote or screen recording from a frustrated user. It makes the problem real and urgent. This direct line of sight ensures your team isn't just building features that work on a technical level; they’re building a product people genuinely love to use. Our guide on quality assurance best practices dives deeper into elevating your standards with the user in mind.

Common Questions About The Quality Review Process

Even with a rock-solid framework, rolling out a new or updated quality review process is going to stir up some questions. And that's a good thing. It means your team is engaged. Addressing these concerns honestly and directly is the only way to get real buy-in and make the transition stick.

Let's dive into some of the most common questions I hear from teams on the ground.

How Do We Get Team Buy-In Without Forcing It?

By not forcing it at all. The fastest way to guarantee a new process fails is to make it a top-down mandate. Nobody likes being told what to do, so instead of mandating the change, you need to sell it.

Start by showing your team exactly how this new process makes their lives easier. Use the data from your pilot project to build a clear "before and after" picture. For example, you could show how using BugSmash cut a project's back-and-forth review time by 30% or killed a confusing, multi-hour email thread.

When people see concrete proof that a change reduces their personal frustration, buy-in stops being something you have to chase. It becomes the natural next step.

What’s The Best Way To Handle Disagreements?

Let's be real—feedback can sting, and disagreements between reviewers are going to happen. The trick is to have a system that removes the personal element from the debate.

This is where your predefined quality standards and checklists become your best friend. When two people disagree, the conversation shouldn't be about who is "right" or "wrong." It should be about which approach best hits the project goals.

Designate a final approver—maybe a product manager or team lead—to be the objective tie-breaker. Their role isn't to pick a side, but to make the final call based on the framework everyone already agreed on. This keeps the focus squarely on the product, not on personalities.

A strong quality review process provides a neutral ground for debate. It turns potential conflicts over opinions into productive discussions about meeting shared, objective standards. This shift is what prevents friction and keeps collaboration healthy.

How Can We Prove The ROI Of This Effort?

Putting time and energy into a better quality review process has to pay off, and you need the numbers to prove it. The good news is, you're probably already collecting the data you need. To make your case, focus on three core metrics:

- Efficiency Gains: How many hours are you saving on rework and manual follow-ups? Track it.

- Quality Improvements: Show a measurable drop in the bug escape rate. Fewer issues reaching customers is a huge win.

- Faster Delivery: Highlight how much you've shortened the overall project cycle time.
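To turn these three metrics into a before-and-after comparison, you can compute the deltas directly. The Python sketch below uses a hypothetical `Snapshot` of one period's numbers and an assumed hourly cost for converting saved hours into money; every figure in it is a placeholder, not a measured result.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One period's numbers, e.g. the quarter before and the quarter after the change."""
    rework_hours: float       # hours spent on rework and manual follow-ups
    bug_escape_rate: float    # share of defects first found in production (0 to 1)
    cycle_time_days: float    # average project cycle time


def relative_reduction(before: float, after: float) -> float:
    # Percentage drop from before to after; a negative value means it got worse.
    return (before - after) * 100 / before


def roi_summary(before: Snapshot, after: Snapshot, hourly_cost: float) -> dict:
    hours_saved = before.rework_hours - after.rework_hours
    return {
        "hours_saved": hours_saved,
        "cost_saved": hours_saved * hourly_cost,
        "escape_rate_drop_pct": relative_reduction(before.bug_escape_rate, after.bug_escape_rate),
        "cycle_time_drop_pct": relative_reduction(before.cycle_time_days, after.cycle_time_days),
    }


# Placeholder figures for illustration only.
before = Snapshot(rework_hours=320, bug_escape_rate=0.20, cycle_time_days=15)
after = Snapshot(rework_hours=200, bug_escape_rate=0.12, cycle_time_days=12)
summary = roi_summary(before, after, hourly_cost=80)
for metric, value in summary.items():
    print(f"{metric}: {value:.1f}")
```

Reporting relative reductions rather than raw counts keeps the comparison fair when project volume changes between the two periods.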

Proving value through data is a universal principle. Look at how the UN approaches its 2025 Comprehensive Review for Sustainable Development Goals. For an indicator to even be considered, its data must cover at least 40% of relevant countries and populations. This global standard shows just how critical robust quality principles are for ensuring data integrity and demonstrating value. You can dive deeper into their methodology in the full report.

When you can walk into a meeting with clear, data-backed improvements, you’re not just justifying the effort. You’re proving that a great quality review process is a powerful engine for business growth.

Ready to stop chasing feedback and start building a world-class quality culture? BugSmash provides the central hub you need to design, implement, and optimize your entire review workflow. Sign up for free and transform your process today!